Submitted on Tuesday, 4/2/2024, at 6:21 PM

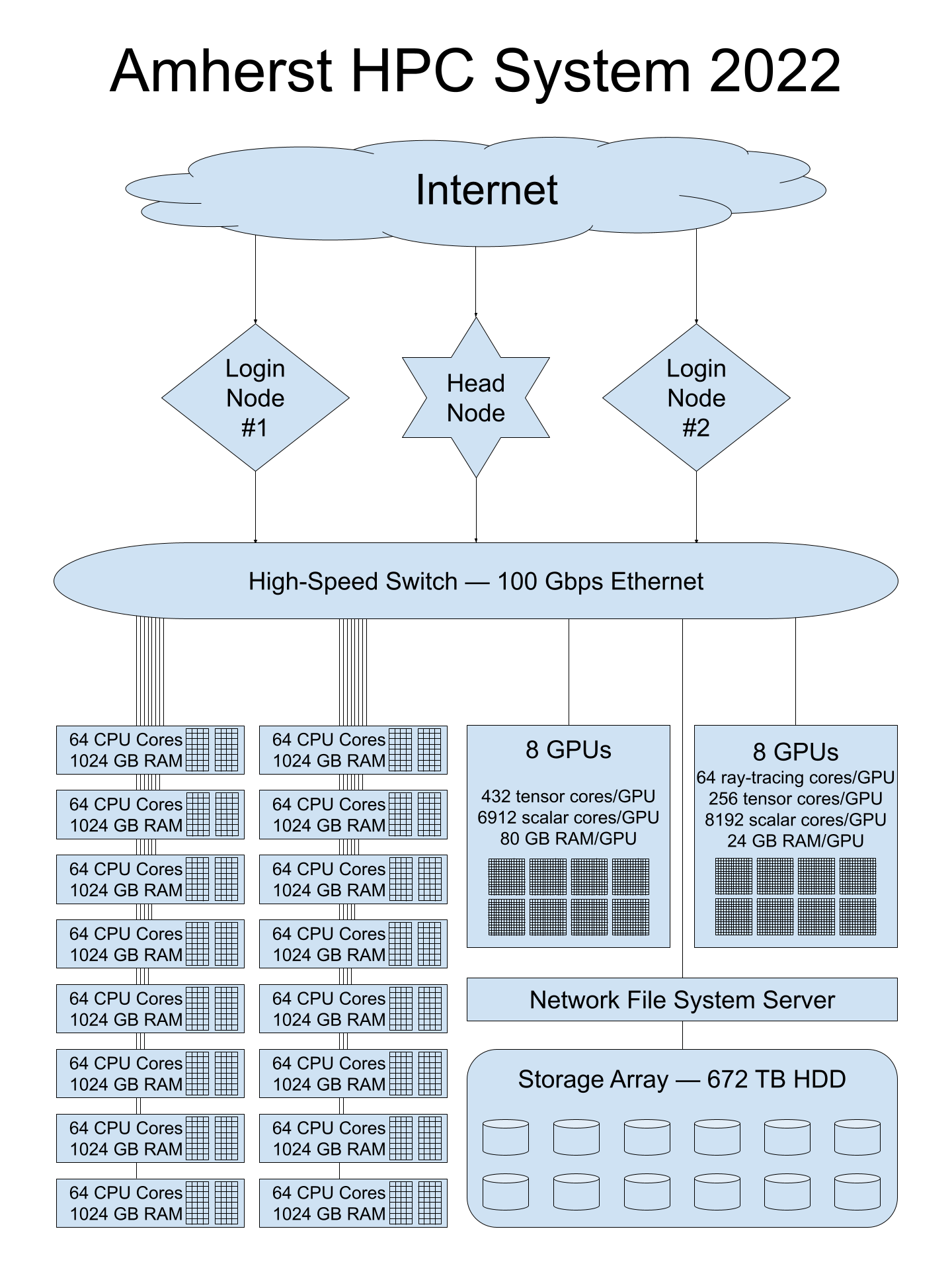

The Amherst College High-Performance Computing System, residing in a single rack at the MGHPCC.

- 16 Dell PowerEdge R6525 CPU Servers:
  - 64 2.6-GHz AMD CPU cores per server, 2 threads per core (1024 cores total, 2048 threads total)
  - 16 GB RAM/core
- 1 NVidia DGX A100 GPU Server:
  - 8 GPUs
  - 80 GB RAM/GPU
  - 432 tensor cores/GPU
  - 6,912 CUDA cores/GPU
  - Front end:
    - 128 2.25-GHz AMD CPU cores, 2 threads per core
    - 8 GB RAM/core
- 1 NVidia RTX A5000 GPU Server:
  - 8 GPUs
  - 24 GB RAM/GPU
  - 64 ray-tracing cores/GPU
  - 256 tensor cores/GPU
  - 8,192 CUDA cores/GPU
  - Front end:
    - 64 2.6-GHz AMD CPU cores, 2 threads per core
    - 8 GB RAM/core
- Network File System Storage:
  - 1 Dell PowerEdge R7515 Server
  - 1 Dell ME-5084 Disk Array: 42 × 16 TB HDD (672 TB total)
- 1 Head Node, 2 Login Nodes:
  - Dell PowerEdge R6515 Servers
- 1 Mellanox MSN2700 100 Gbps Ethernet Network Switch
- 1 Dell S-3048 Management Switch
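The totals quoted in the list above (1024 cores, 2048 threads, 672 TB) follow directly from the per-unit figures. The short Python sketch below reproduces that arithmetic and derives a few aggregate numbers the list does not state explicitly. The constant and function names are illustrative only, and the derived RAM and GPU totals assume the per-core and per-GPU figures apply uniformly across all units.

```python
# Per-unit figures copied from the hardware list; names are illustrative only.
CPU_SERVERS = 16
CORES_PER_SERVER = 64
THREADS_PER_CORE = 2
RAM_GB_PER_CORE = 16

GPU_SERVERS = {
    "DGX A100": {"gpus": 8, "mem_gb_per_gpu": 80, "cuda_cores_per_gpu": 6912},
    "RTX A5000": {"gpus": 8, "mem_gb_per_gpu": 24, "cuda_cores_per_gpu": 8192},
}

DISKS = 42
DISK_TB = 16


def cpu_totals():
    """Aggregate cores, threads, and RAM across the CPU servers."""
    cores = CPU_SERVERS * CORES_PER_SERVER
    return {
        "cores": cores,
        "threads": cores * THREADS_PER_CORE,
        # Assumes 16 GB/core holds for every core on every server.
        "ram_gb": cores * RAM_GB_PER_CORE,
    }


def gpu_totals(spec):
    """Aggregate GPU memory and CUDA cores for one GPU server."""
    return {
        "mem_gb": spec["gpus"] * spec["mem_gb_per_gpu"],
        "cuda_cores": spec["gpus"] * spec["cuda_cores_per_gpu"],
    }


def storage_tb():
    """Raw disk capacity of the array, before any RAID or filesystem overhead."""
    return DISKS * DISK_TB


if __name__ == "__main__":
    print("CPU servers:", cpu_totals())
    for name, spec in GPU_SERVERS.items():
        print(name + ":", gpu_totals(spec))
    print("Raw storage (TB):", storage_tb())
```

Note that the storage figure is raw capacity; usable space depends on the RAID and filesystem configuration, which the list does not describe.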


Tags: HPC, computing cluster, cpu, gpu