Background

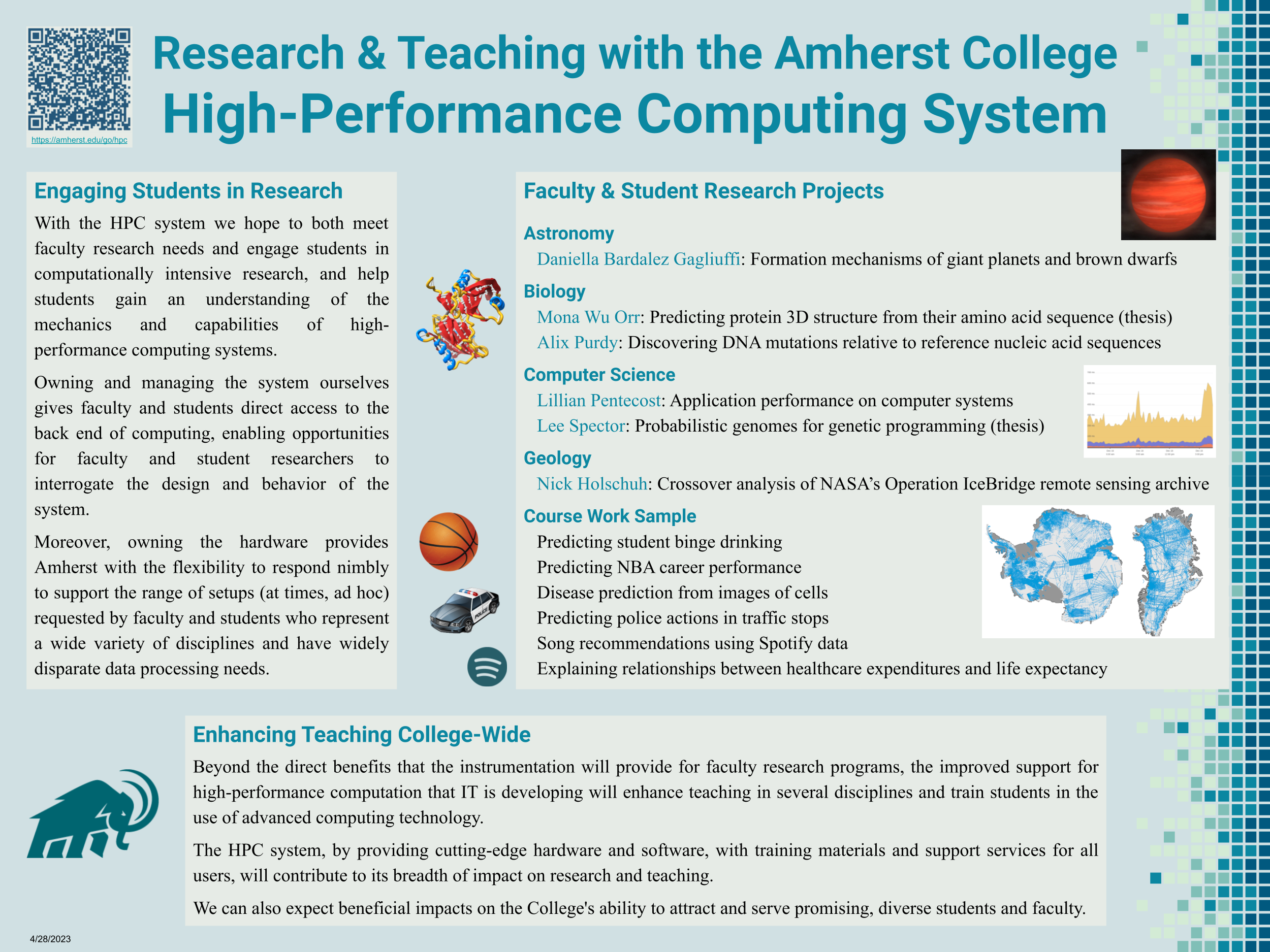

In August 2021, Amherst faculty members Lee Spector (Computer Science) and Amy Wagaman (Mathematics and Statistics) received a National Science Foundation grant for a new high-performance computing (HPC) system, with an initial design based on vendor recommendations for equipment to meet the research and teaching needs of our faculty. The College provided additional support, including funding for staffing. IT staff consulted with several vendors to get the best deal within our budget and concluded the purchase in April 2022.

To oversee the grant, an informal HPC Advisory group was formed. It now consists of Principal Investigators Spector and Wagaman, Faculty Computing Committee representative Lillian Pentecost (Computer Science), Associate Chief Information Officer Fred Kass, Director of Systems and Networking Stefan Antonowicz, Director of Technology for Curriculum and Research Jaya Kannan, HPC Administrator Steffen Plotner, and Senior Academic Technology Specialist Andy Anderson. The group meets regularly to discuss equipment details, implementation, and next steps.

The Massachusetts Green High-Performance Computing Center in Holyoke sits alongside a former power canal, now replaced by a hydroelectric dam on the Connecticut River that provides 98% of the MGHPCC’s power.

IT staff arranged to situate the system at the Massachusetts Green High-Performance Computing Center (MGHPCC) in nearby Holyoke, a dedicated HPC facility with LEED Platinum certification that is shared by the University of Massachusetts, Harvard University, the Massachusetts Institute of Technology, Boston University, Northeastern University, the University of Rhode Island — and now Amherst College. With a high-speed connection to campus through the Five College Fiber Optic Network, our user experience is similar to a local installation.

The HPC system became available for use in November 2022, and you can learn more about using it here (requires an on-campus connection). For more general information about the system, please contact the principal investigators, Lee Spector and Amy Wagaman.

Amherst College High-Performance Computing System Specifications